An Evaluation of Machine Learning Methods for Predicting Flaky Tests

Image credit: Unsplash

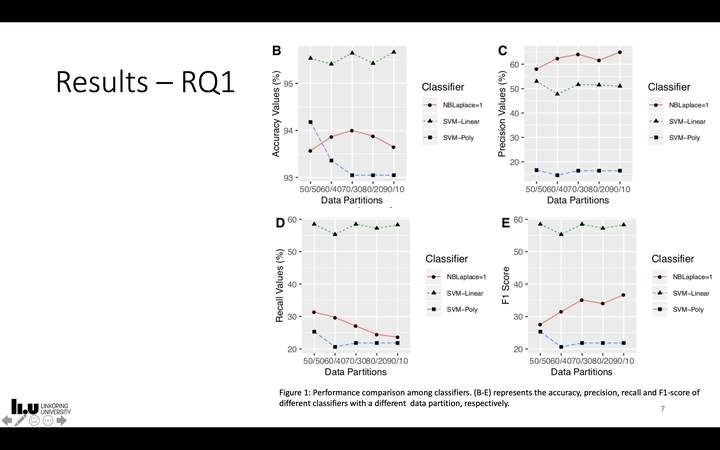

Abstract

In this paper, we investigate the feasibility of using machine learning (ML) classifiers to predict flaky tests, as a means of prevention, in projects written in Python. The study compares the predictive accuracy of three ML classifiers: Naive Bayes (NB), Support Vector Machines (SVM), and Random Forests (RF). We compare our findings with an earlier investigation of similar ML classifiers for projects written in Java. The study also investigates whether test smells are good predictors of test flakiness. Because developers need to trust the predictions of ML classifiers, they wish to know which types of input data or test smells cause more false negatives and false positives. We conclude that RF performed better in terms of precision (> 90%) but provided very low recall (< 10%) compared to NB (precision < 70% and recall > 30%) and SVM (precision < 70% and recall > 60%).
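To make the precision and recall trade-off concrete, the following minimal sketch computes both metrics from counts of true positives, false positives, and false negatives for the "flaky" class. The function name `precision_recall` and all counts are hypothetical and chosen only for illustration; they are not data from this study.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) for the positive ("flaky") class.

    tp: flaky tests correctly flagged as flaky
    fp: stable tests wrongly flagged as flaky (false positives)
    fn: flaky tests the classifier missed (false negatives)
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts for a test suite with 200 truly flaky tests.
# A cautious classifier flags only 20 tests and is almost always right,
# but it misses most of the flaky tests.
cautious = precision_recall(tp=19, fp=1, fn=181)

# A more permissive classifier flags 200 tests, catching more flaky
# tests at the cost of more false alarms.
permissive = precision_recall(tp=130, fp=70, fn=70)

for name, (p, r) in [("cautious", cautious), ("permissive", permissive)]:
    print(f"{name}: precision={p:.3f}, recall={r:.3f}")
```

In this illustration, the cautious classifier rarely raises a false alarm yet leaves most flaky tests undetected, while the permissive one finds more of them but is wrong more often. Which behaviour is preferable depends on whether false negatives or false positives are more costly to developers.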